MetaHuman Digital Mummers

Dover Mummers Play – MetaHuman Performance Diary

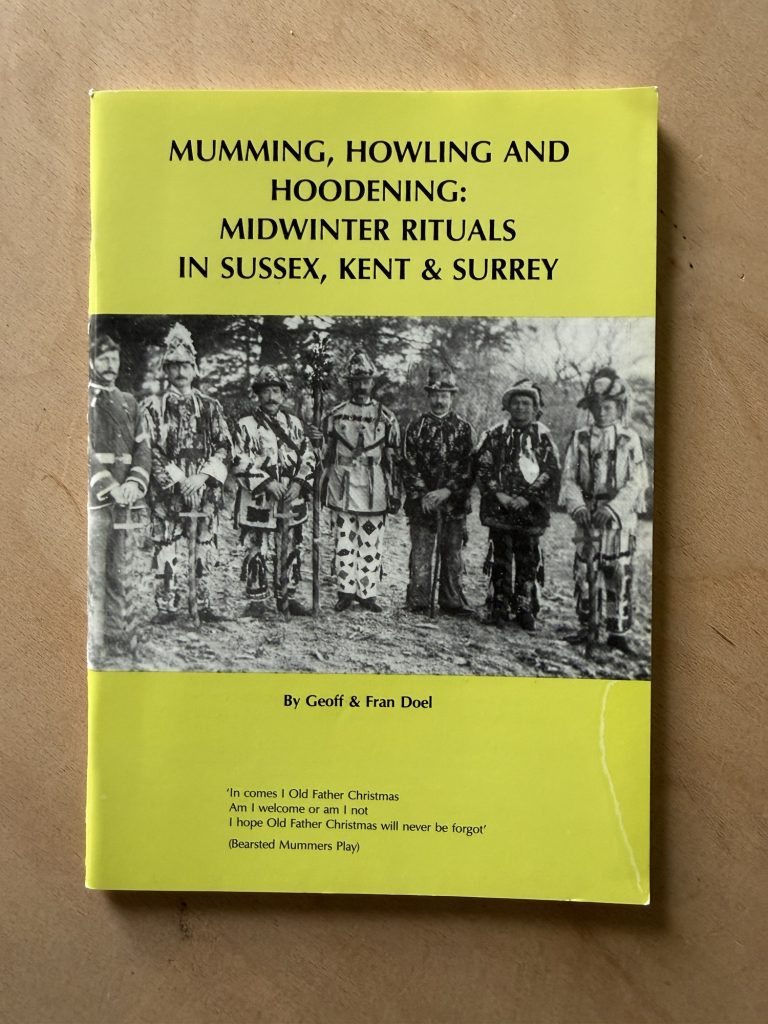

This entry documents the development of a workflow for recording, processing, and re-animating performance data for Dover Mummers Play using Unreal Engine 5.6 and MetaHuman technology. The aim is to capture and reinterpret intangible heritage performance—specifically Kentish Mummers and Hoodening traditions—through digital character work.

Context and Approach

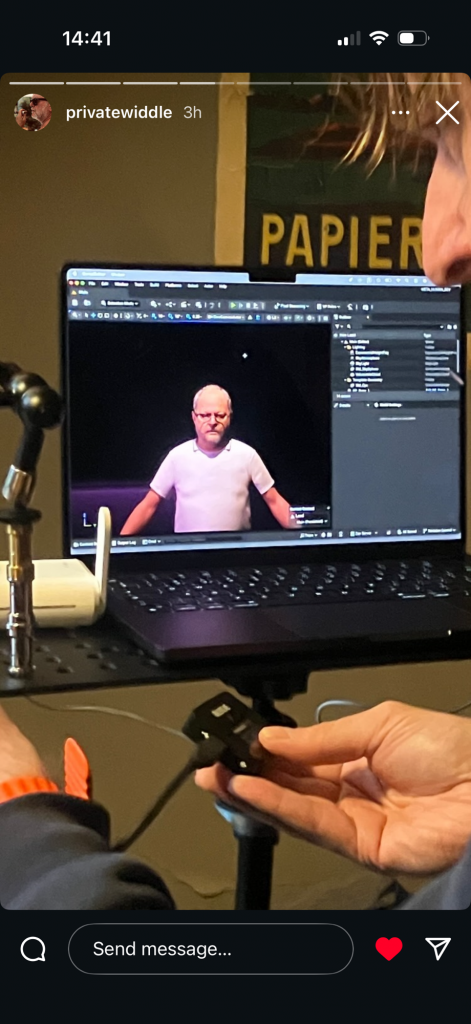

Working with Peter Cocks, I have been building a practical method of recording performers with the iPhone Live Link Face app, generating MetaHuman performances, and integrating these into Unreal. The goal is to establish a repeatable archival process: one that allows performances to be captured quickly on location, stored reliably, and later refined and rendered in high quality.

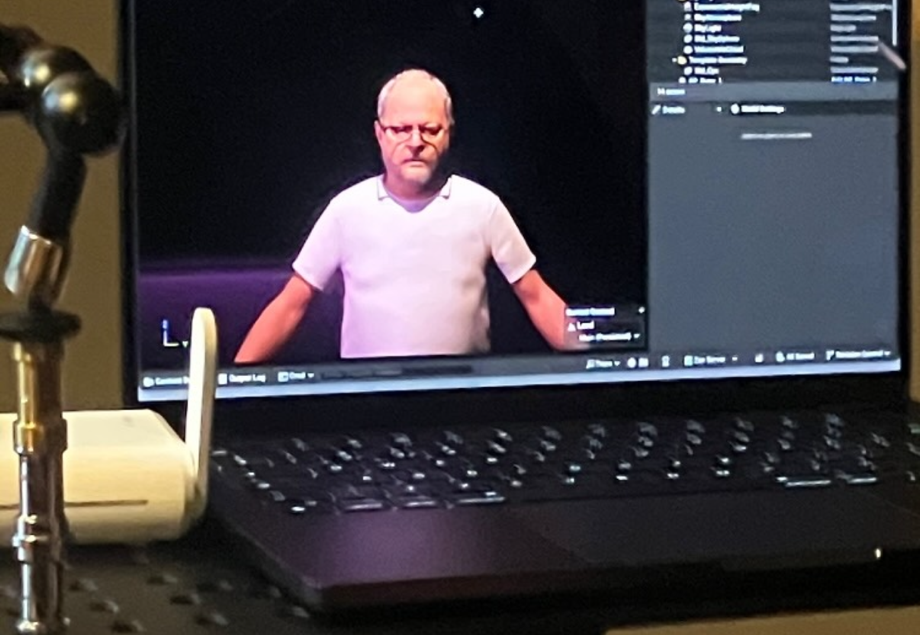

One advantage of this workflow is portability. Using the Live Link Face app with a small wireless microphone setup allows me to capture good-quality material without bringing a full laptop rig. The iPhone alone records high-quality video and audio, making it ideal for fieldwork. When connected to the laptop, Live Link provides a real-time preview of the actor driving the MetaHuman, which is valuable for testing and direction, but the portable capture mode is less intrusive for recording final material.

Scanning and Creating the MetaHuman

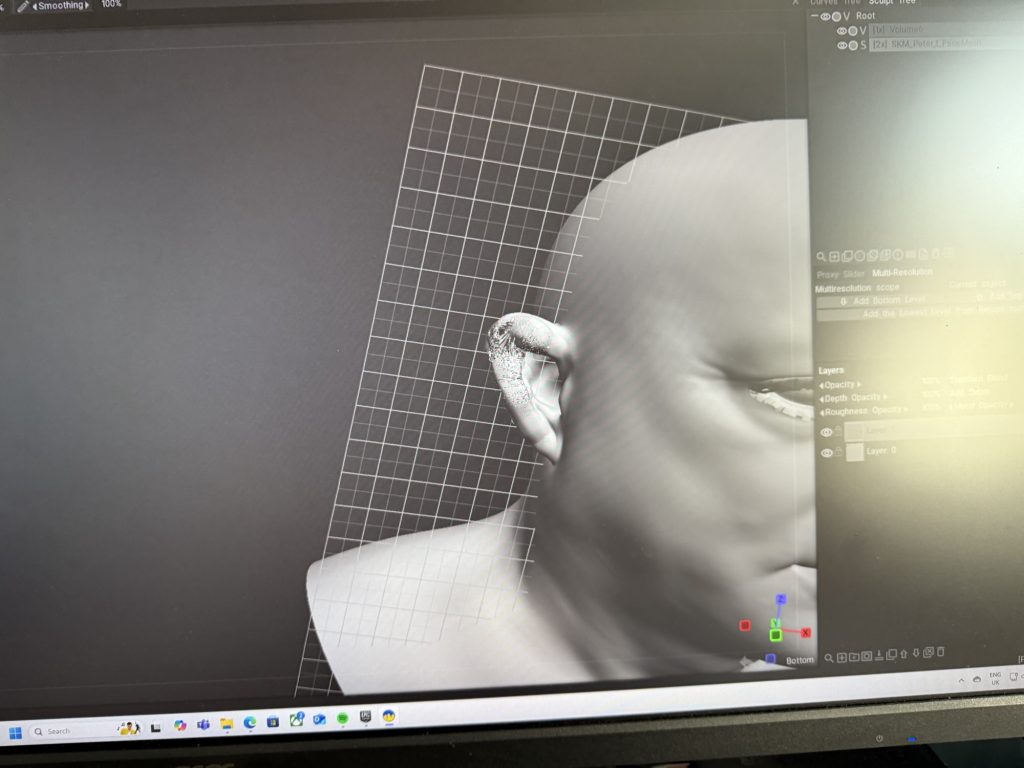

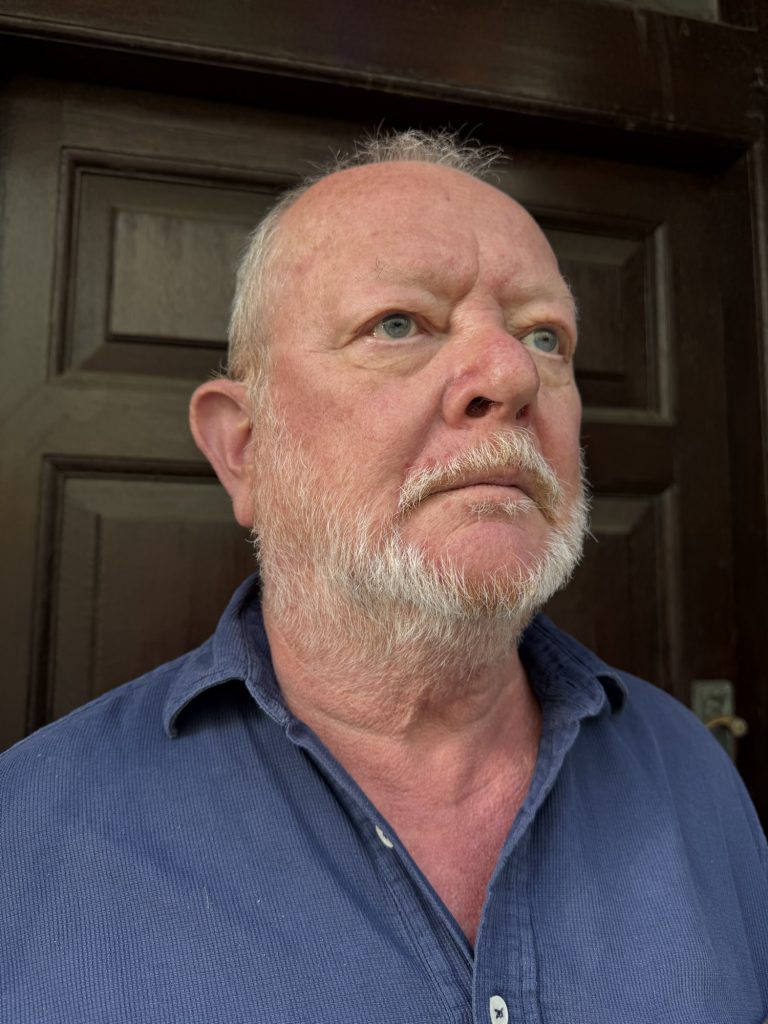

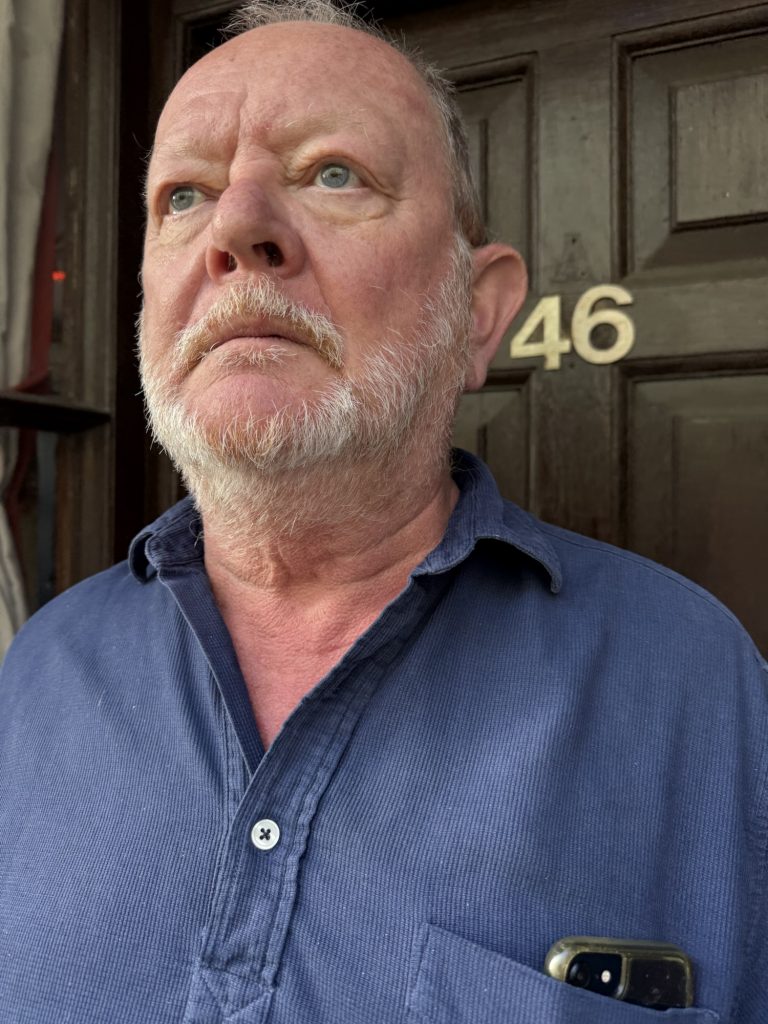

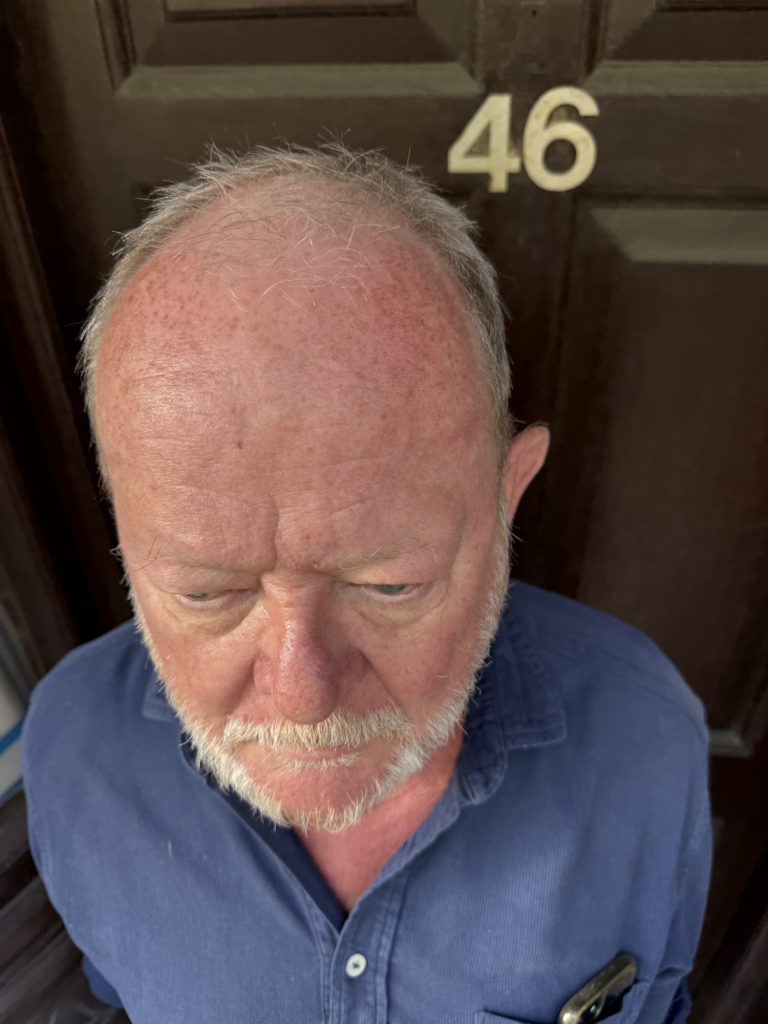

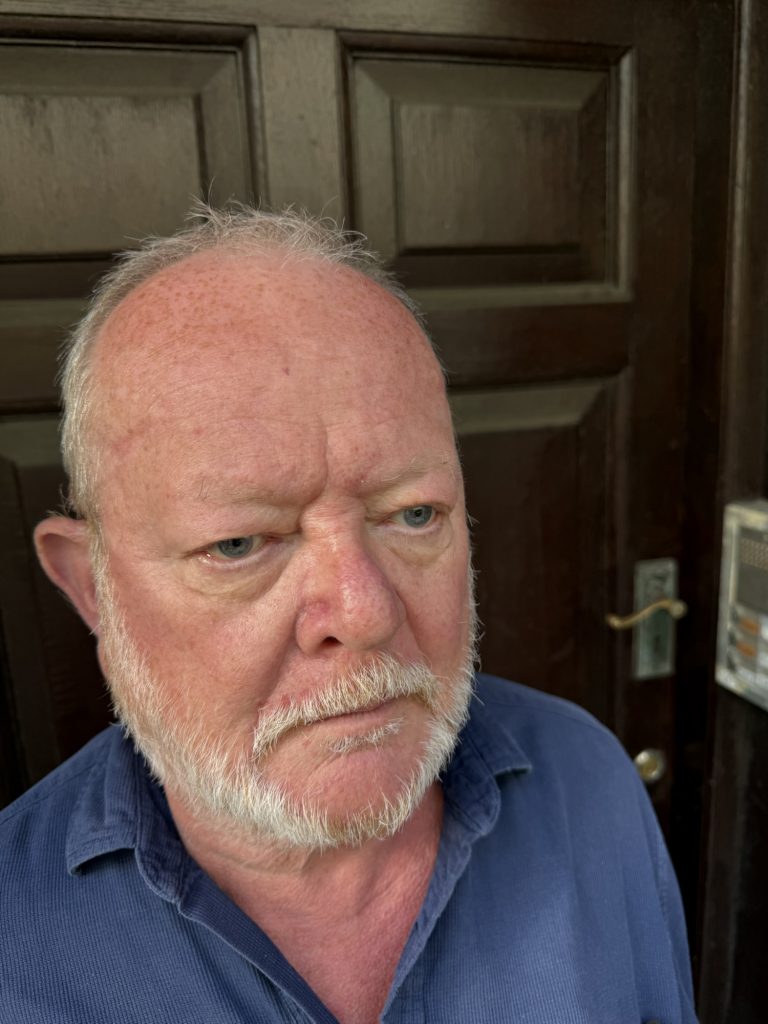

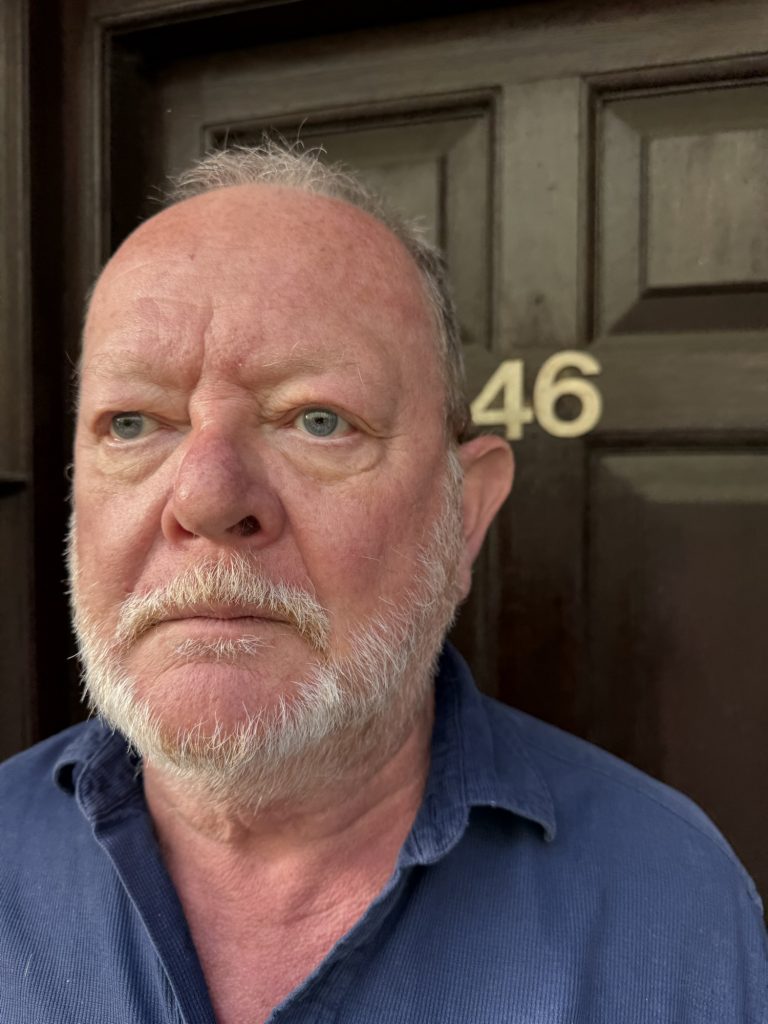

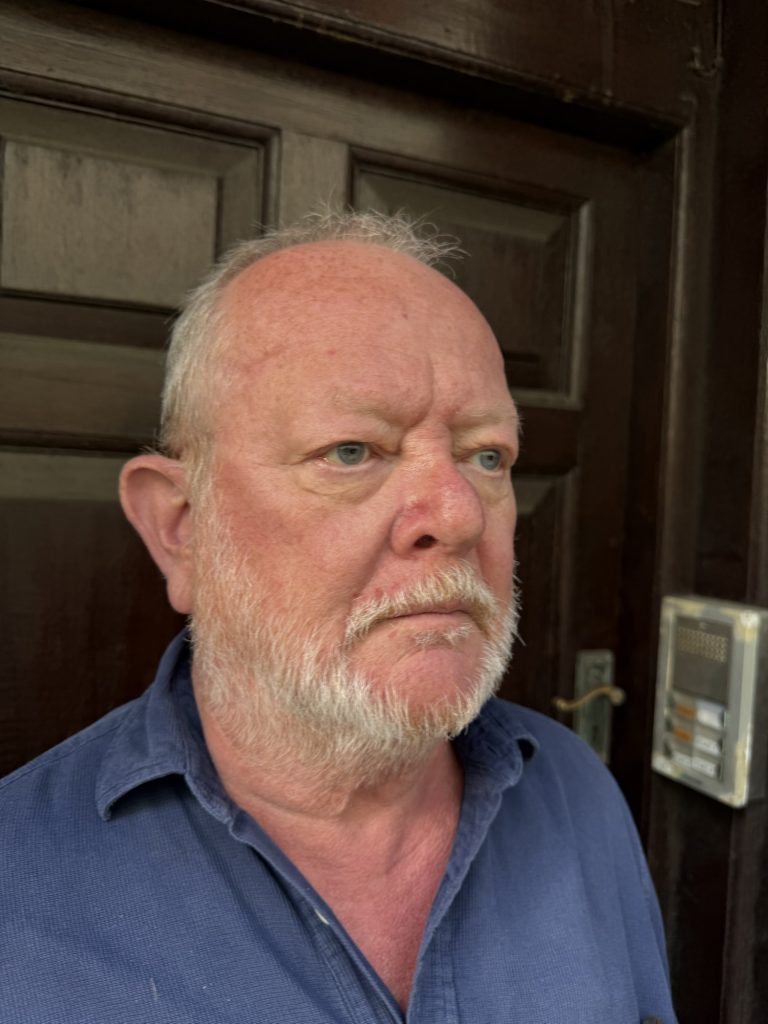

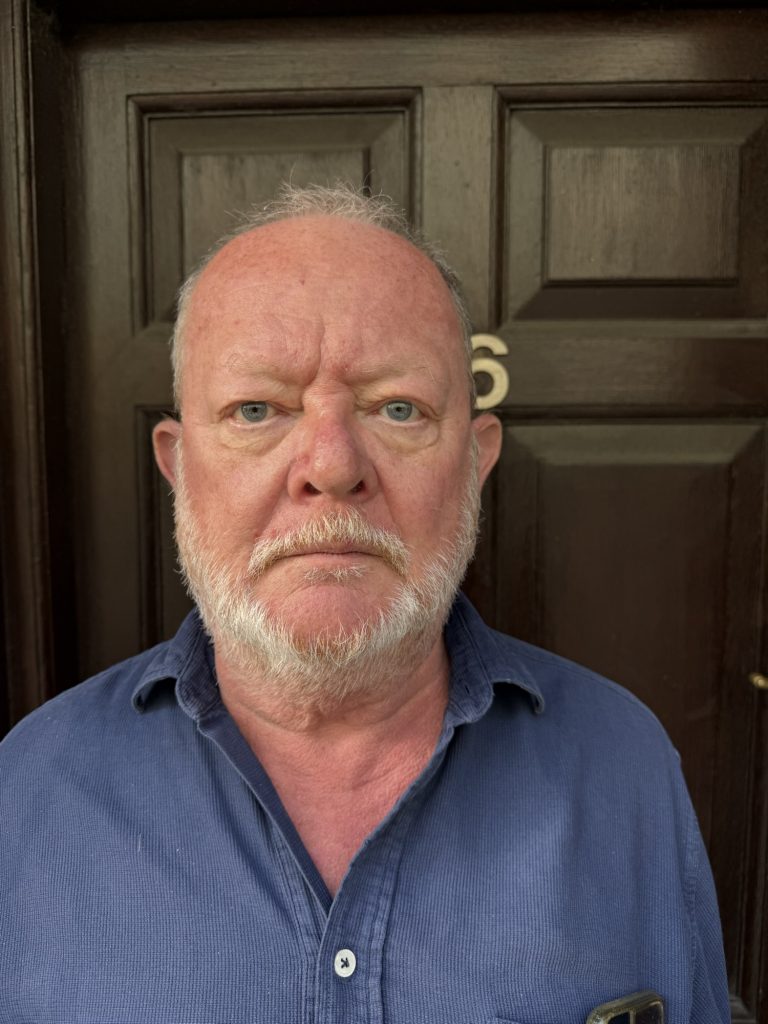

To build Peter’s MetaHuman, I created a 3D scan from the images above using photogrammetry. In future, however, I would use the Scanner App on an iPhone, relying on the TrueDepth front-facing camera. This approach captures more accurate facial geometry than image-based photogrammetry, though it is slightly awkward because you cannot see the scan progress while moving around the subject. Once the photogrammetry scan was complete, it was refined in MetaHuman Creator and exported to Unreal Engine 5.6, generating a blueprint called Peter_MetaHuman_01.

Live Link for Real-Time Preview

The Live Link connection allows the actor’s facial movements to be visualised on the MetaHuman in real time.

Connect the iPhone and Unreal workstation to the same local network (I use a dedicated router on site). In the Live Link Face app, add the computer’s local IP under Live Link Targets and allow local network access. In Unreal, open Window → Live Link and confirm the device appears as a subject. In the MetaHuman actor’s Details panel, under Live Link, set the subject name to match the device name. Ensure the visualisation mesh used for preview is Peter’s facial skeletal mesh from his MetaHuman blueprint.
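Finding the address to enter under Live Link Targets can be fiddly on a laptop with several network adapters. The following is a minimal Python sketch, not part of the original workflow, that asks the operating system which local IPv4 address it would use for outbound traffic. The function name `local_ipv4` and the probe address are illustrative assumptions; on a machine with multiple active adapters the result may not be the one on the dedicated router, so it is worth checking against the router's client list.

```python
import socket


def local_ipv4(probe_host: str = "192.0.2.1", probe_port: int = 9) -> str:
    """Return the IPv4 address the OS would pick for outbound traffic.

    probe_host is a documentation-only address (TEST-NET-1); nothing is
    actually sent to it. It is an assumption chosen for this sketch.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        # connect() on a UDP socket sends no packets; it only selects a route,
        # which lets us read back the local address bound to that route.
        sock.connect((probe_host, probe_port))
        return sock.getsockname()[0]
    except OSError:
        # No usable route (for example, no network at all): fall back to loopback.
        return "127.0.0.1"
    finally:
        sock.close()
```

Calling `print(local_ipv4())` on the workstation prints a candidate address to type into the Live Link Face app. A result of `127.0.0.1` means no network route was found.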

This setup is only for live preview or live recording via the Take Recorder in Unreal Engine. The archival process uses the footage recorded on the phone for later ingest.

Live Link vs ARKit

I use Live Link rather than ARKit. ARKit follows a different ingest and archival process and is not as well aligned with current Unreal workflows. Live Link integrates directly through Live Link Hub and Monocular Video processing and is likely to remain the supported route in future releases.

Archival Ingest and Processing

Copy recorded takes from the iPhone to the NAS archive. In Unreal, open Live Link Hub (Window/Tools → Virtual Production → Live Link Hub). Point it to the folder containing the recordings and select Monocular Video. Ignore the depth data; it is not currently used in this process. When the takes appear, right-click inside each take folder and choose Create → MetaHuman Performance Asset.
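The first step, copying takes to the NAS, is the one most worth making repeatable. As a rough sketch only, the Python below copies each take folder into an archive folder and byte-compares every file afterwards. It assumes the takes have already been transferred off the phone into a local folder with one sub-folder per take; the function name `archive_takes` and both paths are hypothetical, not part of Live Link Face or Unreal.

```python
import filecmp
import shutil
from pathlib import Path


def archive_takes(source_root: Path, archive_root: Path) -> list[str]:
    """Copy each take folder under source_root into archive_root and verify it.

    Takes that already exist in the archive are skipped, never overwritten.
    Returns the names of the takes copied in this run.
    """
    copied = []
    for take_dir in sorted(p for p in source_root.iterdir() if p.is_dir()):
        target = archive_root / take_dir.name
        if target.exists():
            continue  # already archived; leave the existing copy untouched
        shutil.copytree(take_dir, target)
        # Byte-for-byte comparison of every copied file against its source.
        for src_file in take_dir.rglob("*"):
            if src_file.is_file():
                dst_file = target / src_file.relative_to(take_dir)
                if not filecmp.cmp(src_file, dst_file, shallow=False):
                    raise OSError(f"Copy verification failed: {dst_file}")
        copied.append(take_dir.name)
    return copied
```

A call such as `archive_takes(Path("takes_from_phone"), Path("/path/to/nas/archive"))` would then be run once after each recording session. Skipping existing folders rather than overwriting them is a deliberate choice for an archive, where the first verified copy should stay as it is.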

Unreal processes the video and tracking data to generate the performance animation.

MetaHuman Performance Settings

In the MetaHuman Performance Asset panel, confirm:

- Skeletal Mesh: select Peter’s facial skeletal mesh (from Peter_MetaHuman_01).
- Control Rig: enable in the right-hand Sections menu.
- Video Source: set to Monocular Video.

Once processed, Unreal creates an animation asset linked to the MetaHuman’s control rig. This produces accurate facial motion and can be previewed directly on the MetaHuman in the viewport.

Exporting for the Master Sequence

After processing, export the resulting animation as an image sequence or video file. The image-sequence method gives the best quality and makes later frame correction easier.

When the export dialog appears, deselect both “Image Plane” and “Camera.” These elements belong to the preview setup only. Keeping them would display the original reference video in every sequence and generate multiple camera tracks when imported into the master project. Exporting without them produces a clean performance sequence containing only the animation and audio.

Keep all related files—video reference, sequence, and MetaHuman Performance Asset—together inside the same take folder so the data remains self-contained and portable.
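One way to make a take folder demonstrably self-contained is to store a small manifest alongside its files. The sketch below is an illustration under assumptions rather than an established part of this pipeline: the manifest file name `take_manifest.json` and the function names are invented for the example. It records the size and SHA-256 checksum of every file in a take folder, and can later report any recorded file that is missing or has changed.

```python
import hashlib
import json
from pathlib import Path

MANIFEST_NAME = "take_manifest.json"  # hypothetical file name for this sketch


def _sha256(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large video files are not loaded into memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(take_dir: Path) -> dict:
    """Describe every file in the take folder (except the manifest itself)."""
    files = {}
    for path in sorted(take_dir.rglob("*")):
        if path.is_file() and path.name != MANIFEST_NAME:
            files[path.relative_to(take_dir).as_posix()] = {
                "bytes": path.stat().st_size,
                "sha256": _sha256(path),
            }
    return {"take": take_dir.name, "files": files}


def write_manifest(take_dir: Path) -> Path:
    """Write the manifest into the take folder and return its path."""
    manifest_path = take_dir / MANIFEST_NAME
    manifest_path.write_text(json.dumps(build_manifest(take_dir), indent=2))
    return manifest_path


def verify_manifest(take_dir: Path) -> list[str]:
    """Return recorded files that are now missing or changed.

    Files added after the manifest was written are not reported.
    """
    recorded = json.loads((take_dir / MANIFEST_NAME).read_text())
    current = build_manifest(take_dir)["files"]
    return sorted(
        name for name, info in recorded["files"].items()
        if current.get(name) != info
    )
```

Running `write_manifest` once a take is finalised, and `verify_manifest` after moving the folder to another drive or machine, gives a quick check that nothing was lost in transit.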

Building the Master Sequence

Create a master sequence called Dover Mummers Play. Bring each processed take in as a clean subsequence.

Do not include cameras, cuts, or lighting setups inside subsequences. In the master sequence, add a single CineCameraActor, configure lighting, and bind it through a Camera Cuts Track.

With this layout, all MetaHumans align correctly in 3D space and playback remains stable.

Rendering

Render the master sequence through Movie Render Queue as either a movie file or an image sequence + audio. If errors occur, check for stray cameras or duplicate tracks in the subsequences.
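When rendering to an image sequence, a dropped frame is easy to miss until the edit. The following Python sketch, offered as an optional check rather than part of the render itself, scans an output folder for gaps in the frame numbering. It assumes each image file name ends in a frame number followed by the extension (for example `Name.0012.png`); the pattern, the extension list, and the function name `missing_frames` are assumptions to adjust to the actual output settings.

```python
import re
from pathlib import Path

# Assumed naming: anything, then a run of digits, then an image extension.
FRAME_PATTERN = re.compile(r"^.*?(?P<frame>\d+)\.(?:png|exr|jpe?g)$", re.IGNORECASE)


def missing_frames(render_dir: Path) -> list[int]:
    """Return frame numbers absent between the first and last rendered frame."""
    frames = set()
    for path in render_dir.iterdir():
        match = FRAME_PATTERN.match(path.name)
        if path.is_file() and match:
            frames.add(int(match.group("frame")))
    if not frames:
        return []  # nothing that looks like a frame was found
    return [n for n in range(min(frames), max(frames) + 1) if n not in frames]
```

An empty list means the numbering is continuous between the first and last frame found. Audio files and other non-image files in the folder are ignored by the pattern.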

Reflection

This workflow combines real-time visualisation via Live Link with a clean archival ingest pipeline. Selecting the correct facial skeletal mesh, using Monocular Video with Control Rig enabled, and exporting without the preview camera or image plane ensures each sequence stays clean for integration into the master timeline.

Next steps include refining a teleprompter setup for improved eyelines, testing dual-character scenes, and integrating archival voice material from historical Hoodening and Mumming plays. The broader aim is a sustainable method for living digital heritage, where traditional performance practices continue through virtual production.